For those of us born in the 1970s, last week’s news of Atari’s demise came as a sentimental shockwave. Atari was our first video game console. It introduced us to Space Invaders and Missile Command and Pitfall. More importantly, it taught us how to play electronically, forming our habits and blistering our thumbs. To see it filing for bankruptcy was to see a part of our past wither: Atari was like a childhood bedroom we no longer occupied, but whose mere existence comforted us.

The real tragedy here, though, isn’t about the past. It’s about the future. Many of the large corporations currently leading the video game industry are making all of the same mistakes that toppled Atari some three decades ago, consigning it to oblivion. That is, what we’re witnessing now is not only Atari’s requiem, but a reminder that the ailments that weakened and finally killed Atari are still rampant.

I glimpsed that sickness back in 1982, when I was six and Atari was still the biggest name in the video game market. Early that year, the company announced it would release by Christmas a game based on Steven Spielberg’s E.T. the Extra-Terrestrial. My birthday is in early November, but I couldn’t imagine any other gift I wanted more; in a rare feat of forbearance, I told my parents that I would wait for more than a month for the game to come out. When it finally arrived, I plugged it into the console and, my hands trembling, began to play. Within three minutes, I realized that the game, which focused on E.T.’s efforts to fall into and extricate himself from large holes in the ground, was an utter disaster. Then I started crying. Video games had ruined my birthday.

And not, I suspect, mine alone. Since the E.T. debacle,[1] game publishers have remained committed to blockbuster, big-budget games that are heavy on hype and light on innovation: from 1994’s Shaq Fu, in which Shaquille O’Neal fought Egyptian mummies in Japan; to 1999’s Superman: The New Superman Adventures (aka “Superman 64”), which was so shoddily designed that the Man of Steel frequently wandered off the world of the game and into a black abyss; to 2007’s Lair, which would have worked beautifully as a short animated film about dragons but paid very little attention to pesky things like gameplay or level design.

This sort of business model may work for the film industry, where, to make a lot of money, a lot of money must first be spent. There are plenty of blockbuster video games that cost tens of millions to make, but it’s still the sort of industry where an indie hit could bring a windfall: In 2009, a Swedish programmer named Markus Persson used his spare time—and spent virtually nothing—to create a crudely animated sandbox game called Minecraft, eventually releasing it for a small download fee while the game was still in development. At its peak, more than a year after its release, the game was being downloaded every three seconds, earning its creator $15,000 every hour. Within 18 months, Persson’s game studio announced it had hit the $80 million revenue mark.

Persson, of course, is the exception. Even in an industry like video games, still busy being born, rare are the tiny passion projects that spawn a global pop culture phenomenon. Still, you’d expect video game developers to look at Minecraft and realize there’s a lot of very good money to be made by simply cultivating people like Persson and supporting their visionary designs. But game studios aren’t scrambling to find the next Minecraft. Instead, they’re looking for the next E.T.—and when they find it, they’re willing to pay a fortune in licensing and development fees. Game studios are taking the same approach, increasingly, as movie studios: to create and replicate big titles, advertise the hell out of them, and hope you can make a big enough splash to make a lot of money quickly. As in Hollywood, this logic sometimes pays off, and sometimes doesn’t. And when it doesn’t, it can undermine even the most solid and beloved franchises in the industry. Anyone who has picked up Halo 3: ODST, say, or Assassin’s Creed: Revelations, knows well the heartbreak that comes with expecting creative brilliance and instead finding careless, tedious titles designed with little else but greed in mind.

It doesn’t have to be this way. Valve Corporation, a game studio valued at $2.5 billion, is one of the industry’s most successful companies, thanks to popular series like Half-Life, Counter-Strike, and Portal. Valve has no corporate hierarchy, and is famous for encouraging and rewarding innovation: When a team of amateur programmers modified Half-Life to create a free first-person-shooter game of their own, Valve offered them jobs, a collaboration that eventually led to Team Fortress 2, one of the company’s best-loved games. Most contemporary video game studios, however, have less in common with Valve than they do with Atari in its heyday, an entertainment giant more interested in safe bets than experimentation.

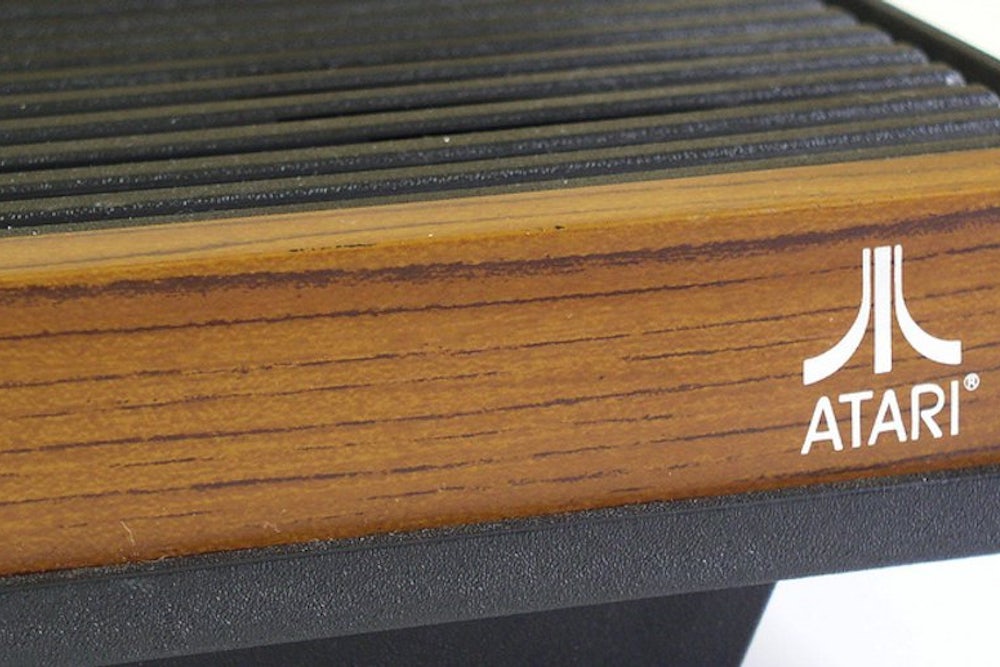

Atari, of course, is remembered less as a video-game publisher than as a maker of consoles—specifically the bulky, wood-paneled Atari 2600.[2] And it was as a console maker that the company made some of its most glaring mistakes. In 1982, for example, five years after the introduction of the iconic 2600, the company released the 5200, a sleeker console with advanced technical specifications. Within two years, it had been pulled off the market. Consumers, Atari learned, weren’t engineers. They didn’t care much about slight improvements in processing power or graphic display, and they didn’t want their games to become obsolete every time a new, incompatible console came out. Nor did they particularly like video game consoles that tried to be something more than that: In 1987, after a decade of trying to break into the PC market, Atari released the XE gaming system, which came with a detachable keyboard and could double as a home computer.[3] It, too, was soon discontinued.

Which, sadly, did little to dissuade Atari’s successors from making the same mistakes. Generation after generation of console makers—Sega, Nintendo, Sony, Microsoft—built faster and better machines, erroneously convinced that it was shiny hardware, not good games, that built brand loyalty. And the shinier the hardware got, the more money console makers were losing: Sony is widely believed to be losing money on each PlayStation 3 it sells. The same is true for Microsoft’s Xbox 360. Even Nintendo, which seemed to break the spell with the Wii—its 2006 console, which shunned fancy processors and invested instead in motion-based game design, to great acclaim and financial success—is now back to its bad old habits: The Wii U, released late last year and featuring such innovations as a built-in screen for each controller, is a significantly more impressive bit of hardware and much less fun to play than the original console. It also costs nearly $100 more per unit than the Wii did upon its release. By year’s end, we’re likely to see new consoles from Sony and Microsoft, which, if rumors are to be believed, will include biometric controllers and uber-advanced microprocessors. This kind of hardware gluttony contributed to Atari’s demise. Now, it’s slowly killing its heirs: Having trained their consumers to expect a brand new console every few years, Sony and Microsoft have watched in horror as their products’ shelf life grows shorter, production costs soar, and fierce competition caps the retail price well below the point of profit. The result? Last year was one of the video game industry’s worst in a decade, due largely to a precipitous drop—32 percent—in console sales, and to competition from—you guessed it—cheaper, more nimble competitors who aren’t burdened by hardware costs (mobile-phone app producers, for instance).

How to avoid further decline? Learn from Atari: While the company indulged in the twin addictions of bloated hardware and costly, stodgy games, its young and sharp competitors understood that the only thing that mattered in the industry was great games, which didn’t necessarily mean extravagant games or games powered by faster, more powerful computing but games that were a lot of fun to play. Nintendo and Sega rode that insight for nearly a decade with Sonic the Hedgehog and Super Mario Bros. and other iconic titles, taking the industry to heights too great for Atari to follow. Then Nintendo and Sega, too, allowed the engineers and the marketing managers to overshadow the game designers, opening the door for Sony and Microsoft. We’ve come full circle: The industry produces stellar machines and impressive games with stunning graphics and intricate mechanics, but if you’re a longtime gamer who’s wondering about the future of the industry, consider the last time you bought a new game and felt the same giddy wonder that accompanied games like Space Invaders[4] and Pitfall. Chances are, it’s been a while. Or perhaps it occurred this very morning—on your cell phone.

As we mourn Atari, then, let us remember that whatever the other reasons for its demise—corporate mismanagement, market conditions, broader economic conditions—the chief cause was joylessness. No longer able to compete with larger and wealthier companies, it largely spent the last two decades of its life introducing its old classics to new platforms, like computers and later smartphones. It turned out not to be a viable strategy for survival. As it lies dying, Atari reminds us that although video games are a nascent medium, a new art form, and the product of a massive industry, they’re still first and foremost games. And games must be fun to play.

Liel Leibovitz is an assistant professor of digital media at NYU and a senior writer for Tablet Magazine.

[1] Before too long, copies of the game, originally retailing for $49.95, were selling for about a dollar. And most were probably destroyed: less than a year after the game’s release, Atari dumped 14 trucks’ worth of cartridges in a New Mexico landfill. Fans, seeking some sort of divine retribution, soon started a rumor that most of those cartridges were of one game in particular, E.T.

[2] The circuitry required to make it work, by the way, was the size of a cigarette box; the machine got its furniture-like design after a team of engineers decided that an electronic gadget had a much better chance of selling if it looked and felt more substantial.

[3] Atari wasn’t the last video game company to try to expand its console’s capabilities. In 1988, following the immense success of its first-generation machine, Nintendo introduced a gadget that turned the console into a precursor of the Internet, allowing users to check weather, stocks, and news. It was soon recalled, but the idea lives on: Both Sony’s and Microsoft’s consoles provide Internet access.

[4] The video game industry owes Space Invaders a particular debt: It was the first game to feature the concept of the high score. Before the game’s introduction, in the summer of 1978, video games, like pinball machines, rewarded successful players with a free game. Space Invaders realized that most people cared more for glory—one’s initials on the leaderboard—than material rewards.