The joke goes that only two industries refer to their customers as “users.” But here's the real punch line: Drug users and software users are about equally likely to recover damages for whatever harms those wares cause them.

Let’s face it. Dazzled by what software makes possible—the highs—we have embedded into our lives a technological medium capable of bringing society to its knees, but from which we demand virtually no quality assurance. The $150 billion U.S. software industry has built itself on a mantra that has become the natural order: user beware.

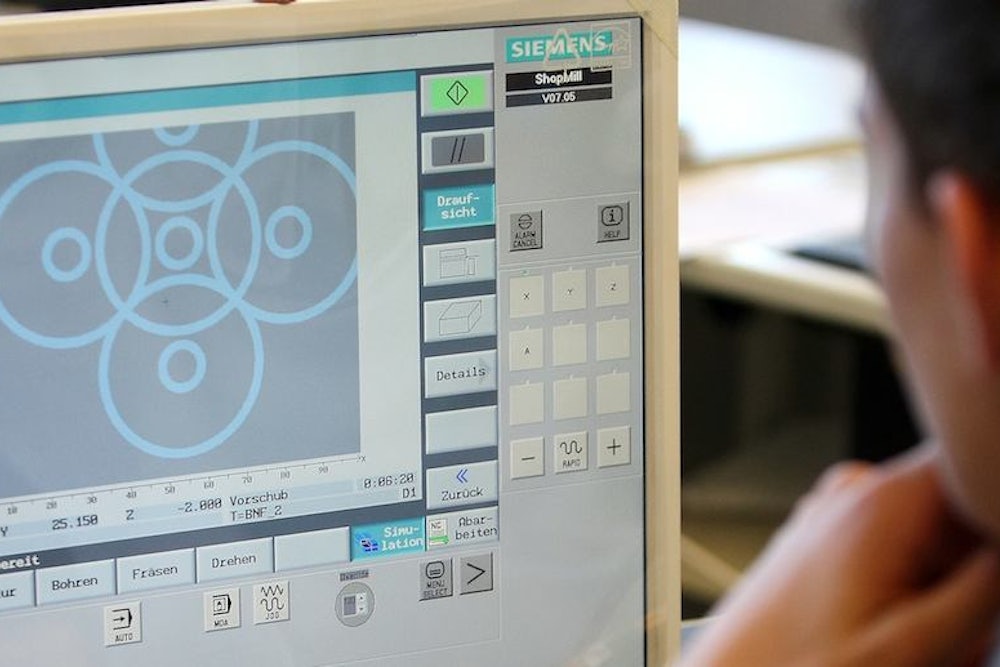

Unfortunately, software vulnerabilities don’t just cost end-users billions annually in antivirus products. The problem is bigger than that. In 2011, the U.S. government warned critical-infrastructure operators about an exploit that was targeting a stack overflow vulnerability in software deployed in utilities and manufacturing plants around the world. In 2012, a researcher found almost two dozen vulnerabilities in industrial control system (ICS) software used in power plants, airports and manufacturing facilities. In its 2013 threat update, Symantec, the world’s largest security software corporation, surprised no one when it announced that criminals were finding and exploiting new vulnerabilities faster than software vendors could release patches. Cybersecurity is a very big set of problems, and bad software is a big part of the mess.

How did we get here?

The rapid evolution of software technology and the surge in the total number of computer users actually led early commentators to warn of software vendors’ increasing exposure to lawsuits, and of the “catastrophic” consequences to ensue. But history has gone the other way. Operating within a “legislative void,” the courts have consistently construed software licenses in a manner that allows software vendors to disclaim almost all liability for software defects. Bruce Schneier, perhaps the most prominent decrier of the current no-liability regime for software vendors, puts it simply: “there are no real consequences for having bad security.” The result is a marketplace crammed with shoddy code.

As users, we tolerate defective software because defective software works most of the time. And we get it much faster and with a great many features. Partly in response to consumer appetite, timely release and incremental patching have become key features of the industry’s “fix-it-later” culture. Software companies look for bugs late in the development process and knowingly package and ship buggy software with impunity. Meanwhile, end users are slow to acknowledge vulnerabilities, apply patches too infrequently, and fail to deploy published updates promptly.

Some experts fear that nothing short of a digital Pearl Harbor—a large-scale attack that exploits critical security holes in our industrial control systems—will create the momentum needed to trigger government regulation of and private investment in quality code.

If that ends up being the case, it won’t be for lack of theorizing. Suboptimal code has been recognized as a problem for decades. Certainly, there are defenders of the status quo who argue that holding software providers liable for their code would raise costs and stifle innovation. But legal academics have spent thirty years disagreeing with that proposition and dreaming up liability schemes designed to force software vendors to shoulder some of the costs long borne entirely by users.

The software liability debate has retained its basic shape over the years, but the harms giving rise to the debate have clearly evolved in that time. The earliest software liability discussions focused on embedded software malfunctions that led to physical injury or death. Concern expanded to software applications used to infringe copyright. With the explosion in cybercrime and cyber-espionage, and rising fears of cyberterrorism, attention has converged on the vulnerabilities lurking in shoddy code.

The shift in kind can also be understood as a shift in scale, with software harms expanding in reach from the end-users who seek to benefit from the deployment of particular software, to third parties affected by its unlawful use, and finally to all actors in an increasingly interconnected and increasingly insecure cyber ecosystem.

These shifts are significant in that they pull the software liability discussion in two directions, compelling us to start holding vendors at least partially accountable for poor software development practices but also complicating any attempt to construct a coherent liability regime. For example, software insecurity can be likened to a public health crisis. The fact that a single vulnerability can give rise to untold numbers of compromised computers and harms that are difficult to cabin makes dumping costs entirely on end users unreasonable as a policy matter. To borrow the words of law professors Michael Rustad and Thomas Koenig, the current paradigm is one in which “[t]he software industry tends to blame cybercrime, computer intrusions, and viruses on the expertise and sophistication of third party criminals and on careless users who fail to implement adequate security, rather than acknowledging the obvious risks created by their own lack of adequate testing and flawed software design.” A more reasonable and balanced system should be possible.

On the other hand, any attempt to systematically hold vendors accountable for vulnerabilities must build in realistic constraints, or risk exposing the industry to crushing liability. Commentators who advocate for software vendor liability have a common refrain: the software industry should not be categorically exempted from the safety standards imposed on other industries. And while that is certainly true, there is danger in over-relying on the analogies so often drawn between software and other, more conventional products and services.

The most common analogy is the car. And there are legitimate parallels between the vehicle safety crisis of the 1960s and today’s software security conundrum. Then, state and federal courts were reluctant to apply tort law even where automobile-accident victims claimed their injuries resulted from the failure of manufacturers to exercise reasonable care in the design of their motor vehicles. Over the next thirty years, however, the courts did an about-face: they imposed on automobile manufacturers a duty to use reasonable care in designing products to avoid subjecting passengers to an unreasonable risk of injury in the event of a collision; applied a rule of strict liability to vehicles found to be defective and unreasonably dangerous; and held automobile manufacturers accountable for preventing and reducing the severity of accidents.

Yet to insist that software defects and automobile defects should be governed by substantively similar legal regimes is to ignore the fact that “software” is a category comprising everything from video games to aircraft navigation systems, and that the type and severity of harms arising from software vulnerabilities in those products vary dramatically. By contrast, automobile defects far more consistently risk bodily injury and property damage. To dismiss these distinctions is to contribute to an increasingly contrived dichotomy between those who see the uniqueness of software as an argument for exempting software programs from traditional liability rules altogether, and those who stress that software is nothing special in order to claim that the road to software vendor liability lies in traditional contract or tort remedies.

As it turns out, with respect to the paradigm shift that led to liability for automobile manufacturers, the courts were only one component of what Ralph Nader has called “an interactive process involving both the executive and legislative branches of government as well as the forces of the marketplace.” Specifically, in 1966, in response to mounting public pressure and political momentum directly attributable to Nader’s highly visible consumer advocacy efforts, Congress passed the National Traffic and Motor Vehicle Safety Act, which vested a federal agency with the power to proactively promulgate and enforce industry safety regulations. In 1987, law professor Jerry Mashaw and lawyer David Harfst described the statute as nothing short of “revolutionary”:

“Abandoning the historic definition of the automobile safety problem as one of avoiding accidents by modifying driver behavior, the 1966 Act adopted an epidemiological perspective. Reconstituted, the safety issue became how to modify the vehicle (environment) so that the interaction of the passenger (host) and the deceleration forces of accidents (agent) produced less trauma.”

Reconceiving software security is as necessary today as reconstituting the automobile safety issue was yesterday. And just as imposing vehicle manufacturer liability required a shift in our auto safety paradigm three decades ago, fairly allocating the costs of software deficiencies between software vendors and users will require examining some of our deep-seated beliefs about the very nature of software security, as well as questioning our addiction to functionality over quality. Recalibrating the legal system that has grown out of those beliefs and dependencies will, in turn, require concerted action from Congress and the courts.

It is common enough to pay lip service to the idea of committing to a system-based view of cybersecurity. We litter our cybersecurity discussions with medical and ecological metaphors, and use these to great effect in arguing for comprehensive cyber surveillance measures and increased public-private data sharing. But most security breaches are made possible by software vulnerabilities. So the real question is this: when the “body” breaks down, when the “environment” fails, will we put our money where our collective mouth is? Will a nuanced view of the interdependent forces at play guide us in determining who must pay when things go wrong and who carries the risks associated with bad software?

Over the next month, in a series of weekly installments, I’ll be exploring whether and how we might hold software vendors liable for the quality of their code. Throughout, I’ll be examining what makes software and software harms distinctive, and assessing how a regime that holds software vendors liable for the flaws in their code or coding practices could be tailored to account for these features.