In the last few years, technological change has so closely tracked speculative fiction’s recent predictions that describing a technological development as “sci-fi” doesn’t mean very much. That said, in the recent case of FBI vs. Apple, where the world’s second most valuable private company finds itself pitted against the federal government over the matter of decrypting one of the San Bernardino shooters’ iPhones, it’s hard not to conjure the pessimism of cyberpunk dystopia. Apple has become a staunch defender of citizens’ civil liberty, and the FBI is advocating yet another expansion of the security state. It’s like a William Gibson novel, only more depressing.

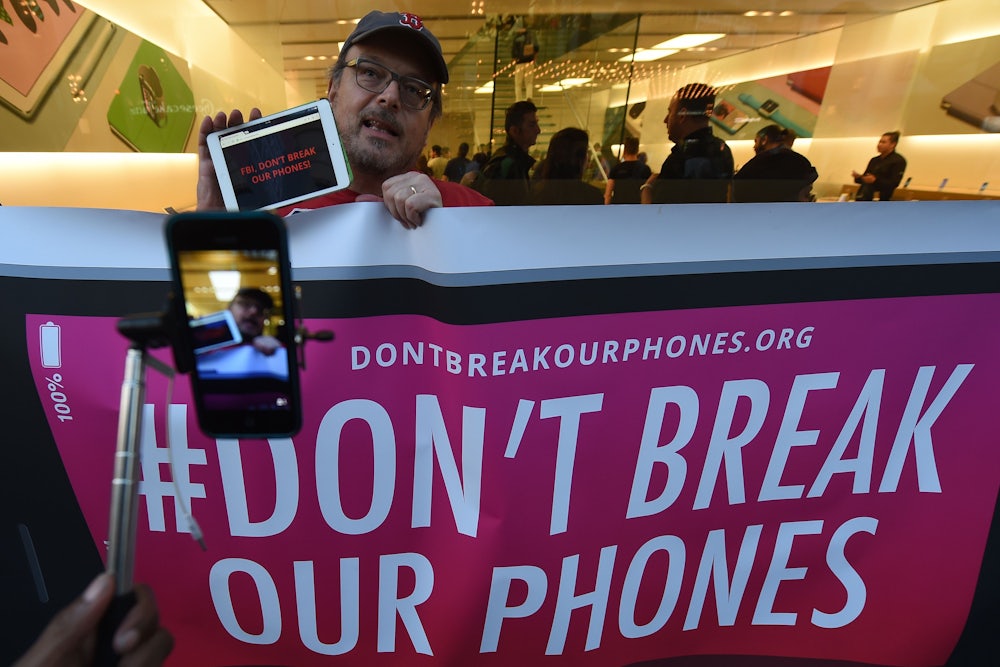

Their battle, however, is likely meant to attract attention. For the FBI, it’s to set a precedent about access to encrypted data, and, for Apple, the goal is to preserve its reputation as a company that places its users’ interests above all else. The details, however, are unsurprisingly arcane and technical.

It began with the awful San Bernardino terrorist attack. The two shooters’ phones were recovered, and in the course of its investigation, the FBI sought to examine Syed Farook’s phone in particular to see whether he was connected to ISIS. The FBI has been unable to access the contents of the phone, partly because Farook disabled online backups six months before the attacks, and partly due to an iPhone security feature that erases everything on the device after too many unsuccessful attempts at unlocking. The government is thus requesting that Apple create a modified version of iOS in order to access the data on the phone. For its part, the FBI claims that the workaround would only affect one phone, and that there is a historical precedent for owners of technology to assist in investigations, as is the case with wiretapping.

Apple has rejected the request for a number of reasons: partly, it says, because the request unduly taxes Apple’s resources; partly because, if that precedent is set, more and more requests are sure to follow; and partly due to some generous interpretations of the First and Fifth Amendments, namely that code is speech, and that forcing Apple to create code is like forcing it to create particular kinds of speech. (The notion is already being contested.) Apple’s most convincing argument, however, and the one that has found the most support, is that the existence of a tool that can crack a phone’s encryption undermines the security of all iPhones, which is true. The mere existence of tools that can break encryption, especially when those tools move back and forth between a company and the intelligence community, radically increases the chance that encryption will be broken for everyone. But with governments pushing for greater surveillance, and digital media companies producing their own forms of intrusion, who, exactly, is meant to defend citizens?

For its part, Apple has been clear that it wishes to defend consumers. And this week, a judge sided with Apple in a similar but separate case, where the U.S. government wants Apple to create a “bypass device” to get into a drug suspect’s phone. But it seems wise to keep in mind that in the past few years, social media and other tech companies have worried consumers as much as the state. Data isn’t safe with private companies either, as large-scale hacks (eBay and Target, to name two) still happen; there’s also the fact that social media relies on users giving up data that is then used to target them in increasingly specific ways.

Unsurprisingly, Apple has found allies in similarly enormous tech companies: Google, Facebook, and Microsoft—each a holder of massive amounts of consumer data—have all lined up behind Tim Cook’s view of the FBI’s request. Google and Facebook in particular have businesses predicated on the trading of customer data to advertising clients, in exchange for ostensibly “free” services. Though each company has made various privacy gaffes—from Google executive Eric Schmidt’s comments that “those who have nothing to hide” have nothing to fear, to Facebook’s poor protection of user details—what is perhaps more worrying is the underlying ethos: that to be a person in the digital age, you have to render yourself visible—to advertisers, trackers, employers, and the like.

In the legal brief it filed, Apple made it clear that the government can already access the backed-up content of an iPhone. Moreover, Apple’s decision to encrypt its phones was deliberate. Apple’s intentions are inscrutable: Whether or not it actually cares about privacy is secondary to the PR function of its stance in this case, not to mention the exoneration from culpability for any illegal activities facilitated by Apple’s tech. Cupertino’s position may sound morally correct, but it’s also just good business sense. Apple gets to pitch itself as the company so concerned with privacy it’s willing to take on the federal government.

But a vague accusation of hypocrisy is beside the point. All engagements with governments and large corporations are ambivalent; we freely trade benefits and costs. The key is in finding the right balance. The real issue is that, when it comes to being protected from the unchecked exercise of power—whether political or economic—citizens are squeezed from both ends.

A public-facing online presence has quickly become a necessary part of economic life, which means, correspondingly, that the collection of personal data is now part and parcel of employability and social capital. On the other hand, regardless of whether one feels post-9/11 surveillance was justified or excessive, the increase in both its scope and depth revealed by the Edward Snowden leaks is inarguable. What this means: Neither the market nor the state seems to have an incentive to protect anyone’s privacy; no one seems to believe that intrusions are themselves violations.

For what it’s worth, I think asking Apple to break encryption is wrong. The trouble with giving security services more power is really that the use of such technology always widens, and always seems to target the already marginalized. Consider: In the 1960s the Civil Rights movement was tracked through phone tapping, while in 2014 Ferguson protestors were surveilled by the FBI. We’re caught between the proverbial rock and hard place.

What is clear is that some kind of legal, or even extra-legal, protection is necessary. That is, either Congress needs to pass legislation more in line with contemporary technology (current arguments rely on interpretations of the All Writs Act of 1789), or digital companies need to adhere to the rules of some kind of neutral body dedicated to privacy, much as the legal profession itself polices practitioners of law. (It’s worth noting that both Democrats and Republicans have supported the expansion of the surveillance state: both Hillary Clinton and Marco Rubio, for example, have called for an expansion of surveillance in the past two years. Ordinary citizens are lacking for advocates.)

So, in this small way, defending privacy falls to Apple, a company with over $200 billion in cash reserves that likely only cares about its reputation and bottom line. Apple gets to be a savior for reasons less noble than they initially appear. After all, regardless of what Cook et al. actually believe, Apple makes almost all of its mountains of money from the sale of its hardware. Unlike Google and Facebook, it thus has much less economic incentive to try to mine customer data. (If Apple’s hardware growth stalls, however, that may change.) If Apple’s position is mostly a function of economic incentive, and politicians aren’t sympathetic, it’s impossible to answer the real question here (who’s looking out for citizens?) in a way that’s not depressingly in line with our most dystopic imaginings.