We live in the age of AI, goes the common refrain. The phrase can be found in the titles of books and in YouTube videos by public figures as far-ranging as the late Henry Kissinger and Robert Downey Jr. It seems as though everyone is talking about generative artificial intelligence models like OpenAI’s ChatGPT and DALL-E—capable of producing realistic writing, photos, audio, and even code—and wondering what they mean for our future. If our intellectual, social, and professional lives are in for major disruption at the hands of this new technology, the logic goes, so, too, are our elections.

Online and on television, pundits stoke the fire. In an interview with CNBC in June 2023, former Google CEO Eric Schmidt predicted that the 2024 elections “are going to be a mess because social media is not protecting us from false generative AI.” Bestselling author Yuval Noah Harari warned that AI is creating a “political earthquake.” The MIT Technology Review forecast in bold text, “Without a doubt, AI that generates text or images will turbocharge political misinformation.” The sentiment is echoed in Washington, D.C., where think tanks like the Brookings Institution host frequent discussions and primers on the topic, exploring “the dangers posed by AI and disinformation during elections.”

Events leading up to November’s presidential election haven’t exactly discouraged these fears. In January, thousands of voters in New Hampshire received robocalls featuring an AI-generated impersonation (better known as a “deepfake”) of Joe Biden; the recording misled voters by indicating that if they voted in the Democratic primary, they wouldn’t be eligible to vote in November’s election. In August, Donald Trump posted a string of misleading (and tacky) AI-generated content on his social media platform Truth Social: a video of himself dancing with Elon Musk, an image of Kamala Harris speaking to an audience of communists, and images suggesting Taylor Swift had endorsed him. (Swift would in fact endorse Harris.)

And then there are the seemingly banal uses of AI in elections that extend beyond the tendrils of deepfakes. The MAGA-backed software Eagle AI empowers subscribers to generate and submit challenges to voter registration quickly and en masse. Those who want to optimize polling and campaigning are pitched AI analytics products that mimic business enterprise solutions: “Lead with AI-powered voter data and intelligence to expand margins and win elections,” reads a description of one such offering from the tech company Resonate. GoodParty.org’s AI campaign manager similarly offers “powerful AI tools [that] help refine your strategy, find volunteers, create content, and more.” These innovations can be traced back at least as far as Cambridge Analytica: A whistleblower reported that, before the company became mired in scandal in 2018, it had been experimenting with AI for advertisement personalization in order to maximize voters’ engagement with its clients’ campaigns.

It’s entirely possible that AI may somehow disrupt the election (and elections in the future). But what if the larger threat isn’t AI itself but all of the attention surrounding it?

Silicon Valley, rather than merely trying to evade accountability around AI—a tack that would be more in keeping with its historic resistance to regulation of any kind—has appeared eager to make commitments about the election. In February, a host of major tech companies, including OpenAI, Meta, Google, and Microsoft, signed the “Tech Accord to Combat Deceptive Use of AI in 2024 Elections” at the Munich Security Conference, advancing seven primary goals: prevention, provenance, detection, responsive protection, evaluation, public awareness, and resilience. Many of the same companies have detailed concrete plans to restrict certain types of prompts from eliciting responses from generative AI models, to label certain AI-generated content appearing on platforms, and to watermark AI-generated content via computational processes that make it possible to determine digital authenticity by consulting the file directly.

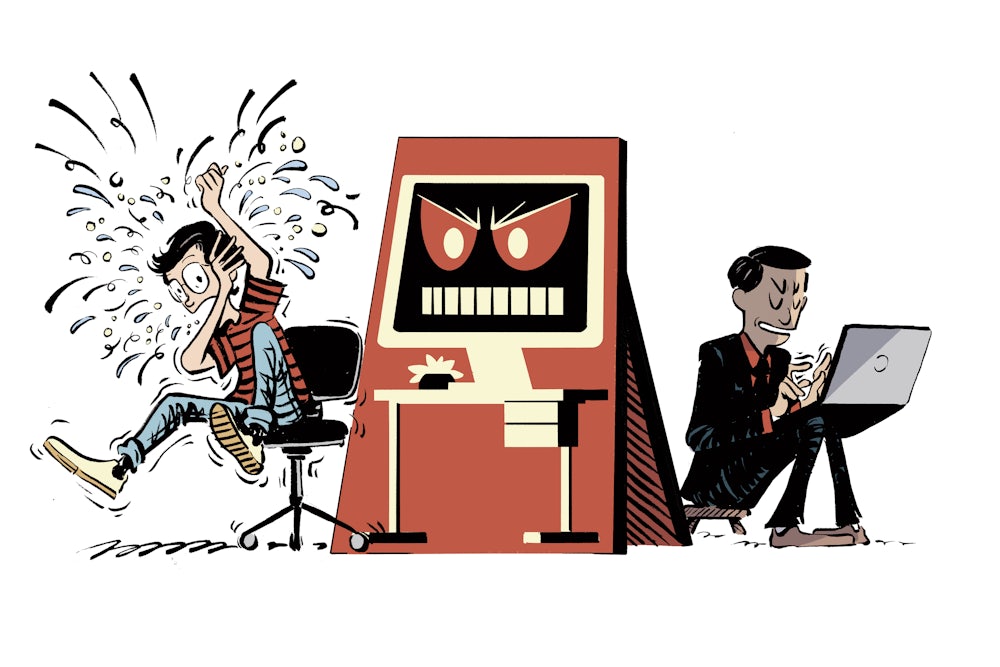

Silicon Valley is stressing technical solutions, in other words. The agreement itself, known as the AI Elections Accord, asserts that AI “offers important opportunities for defenders looking to counter bad actors” and argues that “it can support rapid detection of deceptive campaigns, enable teams to operate consistently across a wide range of languages, and help scale defenses to stay ahead of the volume that attackers can muster.” However, critics have pointed out that the accord lists no clear accountability or enforcement mechanisms for the commitments the companies have made. Instead, the companies eschew blanket regulation and offer attempts at remediation through even more AI. It is a cat-and-mouse game that, in both creating the problem and offering the solution, benefits the purveyors of the technology most.

The tepid interventions proposed by these companies to combat the exacerbation of disinformation via their generative AI products come in response to growing skepticism from both the left and right. Under Joe Biden’s administration, the Department of Justice and the Federal Trade Commission have pursued antitrust suits against tech companies such as Google, Meta, and Amazon, while conservatives have increasingly called for regulation of the industry. These companies are saying the right things in the hope of reducing the likelihood of controversy without actually doing anything meaningful to change their platforms.

Of course, some Silicon Valley elites are more cynically minded. X owner Elon Musk has embraced the far right, disregarding, welcoming, and even participating in the spread of disinformation and hate speech on his platform. Experts have pointed out that actions such as Musk’s are more damaging than AI-generated content. (X is a signatory of the AI Elections Accord.)

The United States has enacted some policy, albeit slowly. The Federal Communications Commission banned AI-generated voices in robocalls earlier this year, and Democrats in Congress have begun introducing bills like the REAL Political Advertisements Act, which would require “a communication (e.g., a political advertisement) to include, in a clear and conspicuous manner, a statement if the communication contains an image or video footage that was generated in whole or in part with the use of AI.” The Securing Elections From AI Deception Act, meanwhile, would “prohibit the use of artificial intelligence to deprive or defraud individuals of the right to vote in elections for public office, and for other purposes.”

“This commonsense legislation would update our disclosure laws to improve transparency and ensure voters are aware when this technology is used in campaign ads,” said Amy Klobuchar about the REAL Political Advertisements Act, which she introduced with fellow Democratic Senators Cory Booker and Michael Bennet. Legislatures in New York, Florida, and Wisconsin, among others, have introduced and enacted similar bills. Until such requirements are enacted into federal law, however, voluntary agreements such as the AI Elections Accord, which lack accountability mechanisms, have no teeth.

Consider also the discursive benefits to Silicon Valley’s efforts surrounding generative AI. Broadly speaking, they deflect attention from other fraught practices. Earlier this year, Signal Foundation president and AI Now Institute co-founder Meredith Whittaker argued on X that “the election year focus on ‘deep fakes’ is a distraction, conveniently ignoring the documented role of surveillance ads--or, the ability to target specific segments to shape opinion.” Moreover, any heightened sense of generative AI as a threat ultimately bolsters the power of the companies leading the charge on research and development: It suggests that the technology is so impressive as to be capable of such dangerous outcomes in the first place. The sentiment recalls the longtime discourse surrounding AI’s existential risk, a historically fringe though growing alarmist theory that forecasts AI’s rise as a possible extinction event. More directly, the recent wave of announcements surrounding commitments to election integrity simply makes for handy headlines that divert talk of the election back to AI and those who market it.

Despite the press attention surrounding Trump’s novel weaponization of deepfakes, it is significant that most of his statements that have dominated media cycles since the rise of generative AI have involved his old habit of simply making things up. After all, it’s not as if Trump’s use of generative AI to suggest that Taylor Swift had endorsed him was his first lie to gain traction. And when Trump took the debate stage against Harris, he relied on his tried-and-true method of uttering real words on live TV to spread despicable, fearmongering lies about Haitian immigrants.

Without question, we live in a moment in which generative AI is largely unregulated and ripe for abuse; it is a powerful technology that undoubtedly has the potential for real and material impact. However, claims of what generative AI could mean for elections are much like most claims in the age of AI: above all else, speculative.

Existential fears and salvational hopes surrounding technology and democratic elections are nothing new; just consider the attention given to televised debates, digital ballots, and online political advertising when they were introduced. It was just 15 years ago that Twitter, the social media platform now called X, was hailed as the catalyst of the Iranian presidential election protests. Technological advancements not only shape elections but do so in unexpected and significant ways; suggesting otherwise would be to ignore how society and technology are fundamentally enmeshed. Yet observing the hyperbole in statements of the past is an opportunity to pause and reflect on the fears of the present.

On the demise of the Department of Homeland Security’s Disinformation Governance Board in 2022, writer Sam Adler-Bell detailed in New York magazine both the liberal emphasis on disinformation and the preoccupation with quantitative interventions. In Adler-Bell’s mind, this obsession “turned a political problem into a scientific one,” and to many Americans, “the rise of Trump called not for new politics but new technocrats.” Two years later, generative AI reigns supreme in the minds of investors, pundits, politicians, and the public alike. It comes as no surprise that November’s election brings with it increased attention to the defining technology of our times. Once again, we find ourselves confronted with a technocratic vision of an election. In this reductive fantasy, democracy itself is threatened by AI and will be saved by those in Silicon Valley who understand it, those who can come up with the right technological solutions. Perhaps, given that there are so many other issues constituting the national discussion, we’d be better served to just remind ourselves that a presidential candidate is knowingly posting AI-generated deepfakes, and then keep our focus trained not on the deepfakes but on him.