Over 35 years ago now, as the Soviet Union teetered on the brink of collapse and President George H.W. Bush worked to secure a peaceful conclusion to the Cold War, the political scientist Francis Fukuyama infamously declared the “end of history.” “What we may be witnessing is not just the end of the Cold War,” Fukuyama wrote in the pages of The National Interest, “but the end of history as such: That is, the end point of mankind’s ideological evolution and the universalization of Western liberal democracy as the final form of human government.”

Yet, as one student in my large survey course remarked during our discussion of Fukuyama’s essay this fall, “History is still gonna go on.” Indeed, historians such as Kathleen Belew have shown that the ostensible triumph of “Western liberal democracy” over “Soviet-style socialism” brought little comfort to Americans, many of whom had been conditioned to expect a nuclear apocalypse at the hands of what Ronald Reagan called the “Evil Empire.” Some, especially those who ran in white-power and far-right circles, worried that an increasingly powerful federal government would supplant the USSR as the principal threat facing ordinary Americans.

The domestic disorder and dysfunction of the early 1990s lent at least some credence to these fears. Ruby Ridge and Waco seemed to reveal the incompetence and callousness of federal law enforcement agencies, while the Rodney King beating, the Latasha Harlins murder, and the Los Angeles uprising suggested that the United States still had a long way to go to fulfill its promise as a multiracial democracy. In these years, the HIV/AIDS crisis raged, as did the culture wars, kindled by the incendiary rhetoric of Pat Buchanan, Rush Limbaugh, and Jesse Helms.

Over the past several years, some scholars and public intellectuals have discovered the roots of our tumultuous present in this “end of history” moment. Nicole Hemmer, for one, has argued that American right-wingers increasingly jettisoned Reagan’s sunny optimism in favor of a “pessimistic, angrier, and even more revolutionary conservatism” in the late 1980s and early ’90s. More recently, John Ganz has spotlighted the many “con men” and “conspiracists” of the period who, he claims, prefigured the full-fledged debut of Trumpism a decade ago.

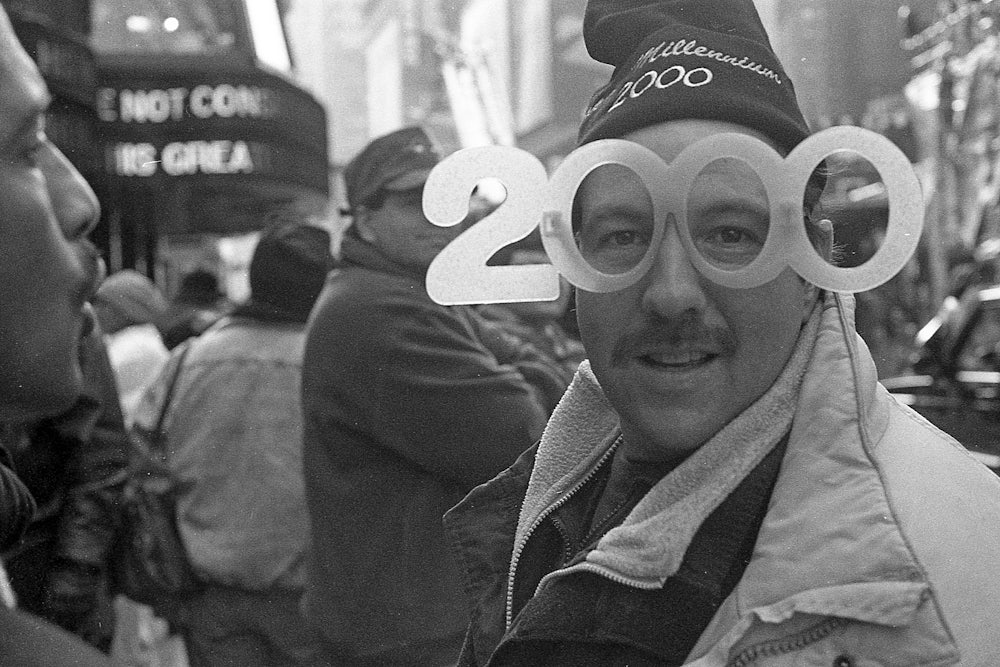

In Y2K, her compact yet powerful new book, Colette Shade examines a later period within the broader “end of history” moment, which she terms the “Y2K era.” Beginning in 1997 with the introduction of Netscape Navigator—a pivotal moment in the career of the internet—and ending with the onset of the Great Recession in 2008, the Y2K era seemed to bear out Fukuyama’s “end of history” thesis, Shade notes. Its rampant techno-optimism, evidenced by the dot-com bubble of the late ’90s and early 2000s, joined with hyper-consumerism and a steadfast belief that “the West” had transcended politics to forge an “ecstatic, frenetic, and wildly hopeful” decade.

Yet as Shade persuasively demonstrates, this hopeful energy masked not only the failures of global capitalism—which would be exposed by the activists at the 1999 Battle of Seattle and later by the economic collapse of 2007–2008—but also the political vacuity and cultural rot of the period. Far from a savvy and innovative political mode, the conscious centrism charted by Bill Clinton simply intensified the punitive, deregulatory, and market-centric policies that had reigned for much of the 1970s and ’80s. And the futuristic bubblegum glam of Y2K pop culture—nostalgia fuel for the likes of Charli XCX and Troye Sivan—reflected and advanced the profound misogyny and fatphobia of the period. We are still living with the consequences.

At the dawn of the Y2K era, the possibilities seemed endless. The fall of the Soviet Union had consecrated a global economic and political order that paired capitalism and liberal democracy. “Free markets” and “free societies” went hand in hand, the thinking went, and accordingly “globalization” became the watchword of the period. The free flow of capital, goods, and ideas across borders and continents would open up closed societies and create a more peaceful, more stable, and more prosperous world.

Nafta, the controversial free trade agreement that the outgoing President Bush negotiated and signed in December 1992 (Bill Clinton signed its implementing legislation into law the following year), would facilitate this process by abolishing certain restrictions on trade between the United States, Canada, and Mexico. Across the Atlantic, the Treaty of Maastricht, signed earlier in 1992, established the European Union, which would operate on free market principles.

Thomas Friedman, a columnist at The New York Times, captured the prevailing political and economic mood with his infamous Golden Arches theory. First publicly articulated in a 1996 column in the Times, the theory went something like this: No two nations with McDonald’s restaurants have ever gone or will ever go to war with one another. “People in McDonald’s countries don’t like to fight wars,” Friedman argued. “They like to wait in line for burgers.”

Many observers also believed that the growing popularity of home computing and the internet would break down economic, political, and cultural barriers between populations across the globe, thereby enriching all parties involved (in multiple senses of the word). Undergirded by information technology, boosters of the so-called New Economy promised unlimited, unfettered growth. The arrival of the internet in American households during this period fueled the dot-com bubble, which rested on flawed valuations of dubious start-up websites such as Pets.com and eToys. “It seemed like the stock market would keep going up forever and ever,” Shade reminds us, “and everyone would be rich if they could just get in on the action.”

The dot-com crash of 2000 put a damper on some of this wild-eyed optimism, but for many observers in the United States, the dream of peace and prosperity at the end of history endured until 2008. With the onset of the Great Recession, the hope that so many felt during the Y2K era turned to despair. That despair only deepened as a dynamic and wildly successful presidential candidate who had vowed to bring “hope” and “change” filled his Cabinet with some of the same Clintonites who had, in fact, engendered the economic crisis then enveloping the world. Ultimately, Barack Obama’s failure to deliver the peace, prosperity, and stability he had promised further disillusioned so many Americans, especially millennial lefties such as Shade and me.

“To tell the story of the Y2K era is … to tell the story of the millennial left during the decade that followed,” Shade provocatively writes near the end of her book. Out of the dejection and disillusionment of the Clinton, Bush, and Obama years erupted “Occupy Wall Street, Jacobin, the Bernie campaign, Chapo Trap House, the Democratic Socialists of America,” Black Lives Matter, the #MeToo movement, “the increasingly visible trans rights and disability rights and immigrant rights movements,” Standing Rock, “Starbucks unions and the movement for climate justice.”

In other words, the Y2K era and its Obama coda radicalized Shade and millions of others. As historian Keeanga-Yamahtta Taylor explained in 2016, it was no accident that Black Lives Matter, for one, emerged during the second term of the nation’s first and only African American president. “How did we get from the optimism of the Obama presidential run to the eruption of a protest movement calling itself ‘Black Lives Matter’?” asked Taylor. “Perhaps the optimism itself is to blame, or rather the contrast between Obama’s promise and the reality of his tenure.” As Shade puts it, “A generation raised to believe that history’s biggest questions were settled felt that they had been lied to.”

Those lies took many different forms, Shade illustrates in her wide-ranging and tremendously compelling book: from the belief in the emancipatory power of consumption to the conviction that thinness was next to godliness, to Americans’ seemingly unwavering faith in U.S. hard and soft power.

It’s impossible to contemplate the Y2K era and all its boosterism without also weighing the effects of the terrorist attacks of September 11, 2001, and the “war on terror” that followed. In what might be her most controversial argument, Shade dismisses “the cliché that 9/11 marked a new phase in American history, policy, and culture.” The U.S. “did enter a new chapter,” she contends, “though it was part of the same book,” as President George W. Bush encouraged Americans to double down on the same habits that had defined the late ’90s. “Get down to Disney World in Florida,” Bush implored the public. “Take your families and enjoy life, the way we want it to be enjoyed.” A good American was a good consumer—or, in Shade’s words, “Shopping was … the most important part of citizenship.” In that vein, the post-9/11 compulsion to purchase and display American flags—“many made in the same Chinese, Bangladeshi, and Indonesian sweatshops as other American goods,” Shade recalls—seamlessly blended the well-worn American pastimes of materialism and jingoism.

The decision to shift the focus away from September 11 as a rupture point puts Y2K at odds with recent (and excellent) books by Richard Beck and Spencer Ackerman. Beck and Ackerman both argue that the 9/11 attacks set the country on a fundamentally new course, which would ultimately foster the political malaise and uncertainty on which Trumpism depended and continues to depend. The subtitle of Ackerman’s book is How the 9/11 Era Destabilized America and Produced Trump, while in his account, Beck hypothesizes that “if September 11 had not occurred, Donald Trump could never have become president.”

While the destabilizing effects of 9/11 cannot be overstated, there’s also something to be said for Shade’s examination of the preceding years, which encourages readers to look at the forms of capitalism, consumerism, and chauvinism that were already in play. “9/11 never challenged” the assumptions on which the Y2K era rested, Shade insists. “What defined the Y2K Era,” she writes, “was the unquestioned rule of American-led global capitalism, which began after the Cold War ended in the early ’90s and the dot-com bubble inflated in the late ’90s.” Only with the arrival of the Great Recession in 2008 would these assumptions break apart, Shade argues.

The “American-led global capitalism” at the heart of the Y2K era relied on both U.S. hard power—on full display during the decades-long “war on terror”—and the “soft imperialism” of “American pop culture,” which enticed “people around the world to embrace an American-led economic system.” But as Shade details, Y2K pop culture—and particularly its more judgmental and sinister elements—also had a profound impact on the lives of young people stateside. In the book’s most heart-wrenching chapter, Shade recalls how the thin-obsessed fashion magazines of the early 2000s caused her to develop an eating disorder. (She productively ties the era’s fatphobia to a neoliberal and meritocratic obsession with risk and metrics: “weight, money, grades, statistics.”)

In this moment, vultures like blogger and provocateur Perez Hilton simultaneously preyed on and propped up Lindsay Lohan, the Olsen twins, Britney Spears, and Paris Hilton, among other overexposed celebrity teens and young adults. Subject to constant scrutiny, harassment, and judgment, these young women understandably struggled to “contain all the contradictions of fame and wealth and femininity,” Shade observes, which led to very public outbursts and breakdowns, the lifeblood of the “Y2K-era fame ecosystem.”

This ecosystem collapsed along with the housing bubble and the global economy more generally, Shade argues. The party was over, although a new party—this one hosted on social media—was already underway. Today, Shade notes, “we have all been conscripted into influencer-dom, which is to say fame. We are all micro celebrities.” Just as the Y2K-era thin ideal helped cause millions of eating disorders, and just as the “fame ecosystem” chewed up and spit out so many young stars in the early 2000s, the post-Recession rise of “influencer-dom” has exacerbated body image issues among American youth and fundamentally reshaped the social landscape—for better or worse.

“From 1997 through 2008, we lived dishonestly,” Shade explains in her conclusion. “We dreamt we were ascending into the future, leaving history and all its complications behind on the ground. Up and up we went into the sky. Now everyone could get rich in the stock market, drive a Hummer, own a McMansion.” But, “in 2008, we awoke in our beds, drenched in sweat.”

In the years since, we’ve tried desperately to keep the dream alive. Obama bailed out and sought to rehabilitate the very financial institutions that had immiserated millions of Americans (much to the chagrin of those who occupied Zuccotti Park during his first term). Donald Trump, whose electoral base is the “American gentry,” has often promised to deliver prosperity to those left behind by globalization and neoliberalization.

But it’s unclear who truly believes in the viability of the American dream or, more broadly, of the American project. In a Gallup survey taken last month, only 19 percent of Americans indicated that they were satisfied “with the way things are going in the U.S.” The last time a majority of Americans expressed satisfaction with the direction of the country was December 2003, right in the middle of the Y2K era.