Google Snubs EU Law Over Fact-Checking YouTube Videos

Google announced it would pull out of the EU’s anti-disinformation code of practice.

Google announced its intention Thursday to flout European Union standards for digital fact-checking, opting not to build an internal department to moderate and verify YouTube content despite requirements from a new law.

The European Commission’s Disinformation Code of Practice has remained a voluntary policy, leaving the ball in the tech industry’s court. Companies that opted in to the code, including Meta, Microsoft, TikTok, and X, are supposed to self-regulate while submitting reports on their platforms’ compliance with the code.

But a 2024 study published in the Internet Policy Review found that, by and large, companies were “only partly compliant” with the EU code, with their reports lacking detail and offering “missing, incomplete, or not robust” data. The EU has since urged companies to convert the voluntary guidelines into an official policy under the union’s newer content moderation law, the Digital Services Act of 2022.

Google has never had a fact-checking department to oversee content on YouTube, where users reportedly upload more than 500 hours of video every minute and collectively watch an average of one billion hours of content per day, according to YouTube’s blog. The law would require Google to build fact-checking into its search function, its ranking systems, and its algorithm, and to add fact-checked results alongside YouTube videos.

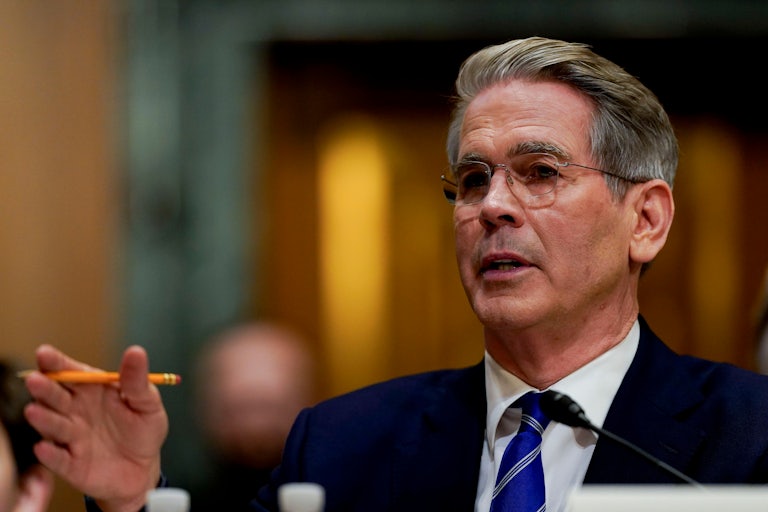

The search engine behemoth’s global affairs president Kent Walker rejected the mandatory new standards in a letter to European Commission deputy director general Renate Nikolay, claiming that the code “simply isn’t appropriate or effective for our services,” according to Axios. Walker instead pointed to a feature introduced on YouTube in 2024 that lets users collectively verify information themselves, akin to X’s “Community Notes.”

Google will “pull out of all fact-checking commitments in the Code before it becomes a DSA Code of Conduct,” Walker wrote.

But Google isn’t the only company skirting its disinformation commitments. Meta and X have sharply scaled back their content moderation, allowing disturbing language to circulate openly on their platforms.

Earlier this month, Meta CEO Mark Zuckerberg announced that the social media company would drop its third-party fact-checkers and replace them with user-generated corrections.

“Fact-checkers have been too politically biased and have destroyed more trust than they’ve created,” Zuckerberg said in a video announcement posted to Facebook. “What started as a movement to be more inclusive has increasingly been used to shut down opinions and shut out people with different ideas, and it’s gone too far.”

Against the backdrop of these decisions, a cohort of Silicon Valley’s most successful figures has donated millions to Donald Trump’s inaugural fund, seemingly caving to the incoming forty-seventh president in a bid to make his second term as friendly as possible to their massive tech and AI corporations.